AI Safety in Robotics: Turning Research into Real-World Trust

The question isn’t what AI can do—it’s whether we can trust it.

In robotics, AI safety isn't just a technical challenge; it's a prerequisite for building trust and enabling widespread adoption. Events such as the Stanford Center for AI Safety Annual Meeting 2025 help bridge the gap between cutting-edge academic research and practical safety applications in real-world robotics.

At Saphira, our mission aligns with these goals: we apply AI safety principles specifically to robotics so that systems operate reliably, safely, and transparently in complex environments.

Why AI Safety Matters in Robotics

Unlike purely software-based AI, robots interact physically with their surroundings: they lift, move, navigate, and sometimes make autonomous decisions that affect human safety. This physical engagement amplifies risks related to liability, worker safety, and market trust. An unsafe robot can cause accidents, damage a company's reputation, or slow the acceptance of robotic technologies.

Therefore, safety isn’t optional; it’s the foundational element for the successful deployment of robotics in industries such as manufacturing, healthcare, and logistics. Building systems that are safe by design encourages confidence among users, regulators, and investors.

From Research to Practice: Operationalizing AI Safety Principles

Academic research at Stanford emphasizes core themes such as alignment, robustness, and trust, which are key to translating AI safety into tangible, deployable solutions.

Alignment: Ensuring Safe Robotic Behavior

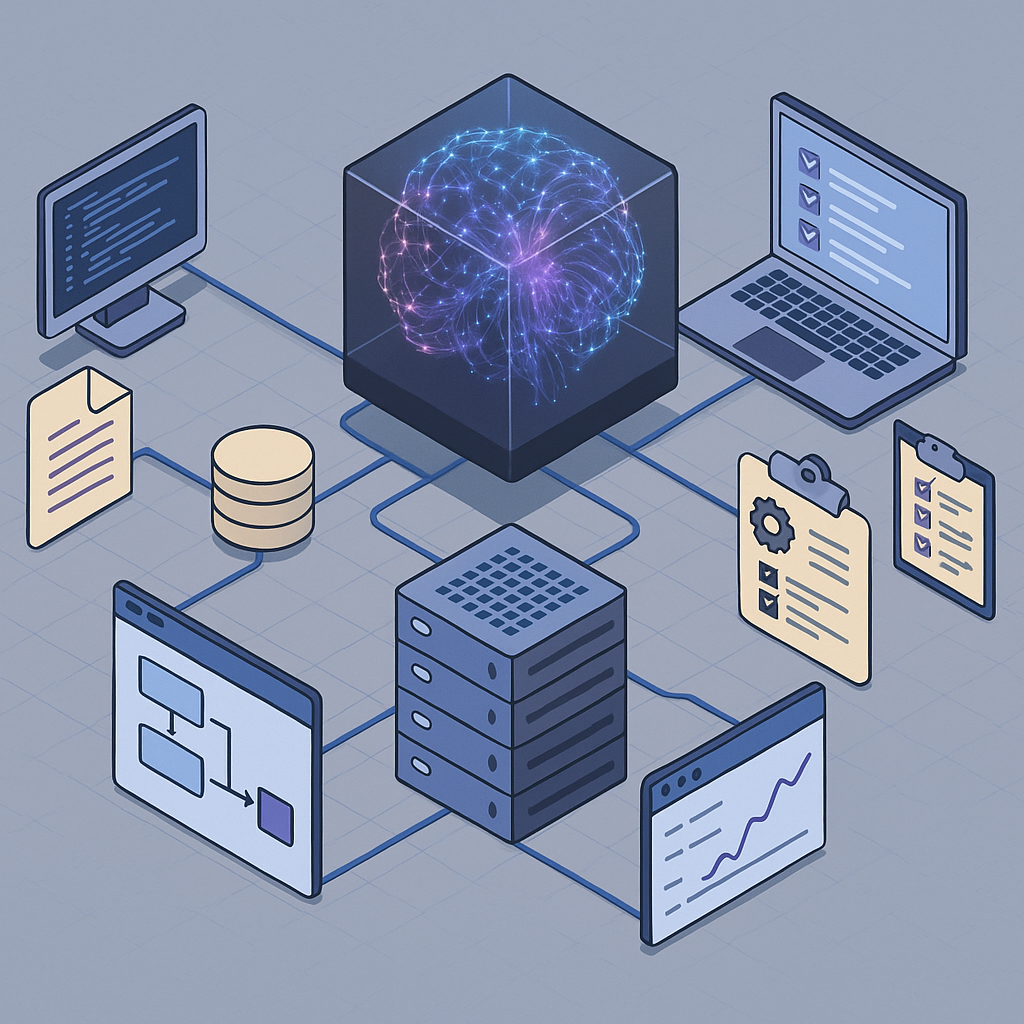

Saphira helps operationalize alignment by automatically structuring safety standards into actionable requirements. We parse directives from standards and certification schemes such as UL, CE, ISO, and TÜV and convert them into clear, traceable tests tailored to each robotic system. This helps keep a robot's behavior aligned with its safety requirements, even in unpredictable environments.
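To make "traceable tests" concrete, here is a minimal sketch of one common way to represent traceability: each requirement records the standard and clause it came from, each test points back to the requirement it verifies, and a traceability matrix exposes requirements that no test covers yet. This is an illustration under stated assumptions, not Saphira's actual data model. The class names, functions, IDs, and clause references (`Requirement`, `TestCase`, `traceability_matrix`, `untested`, "x.y", "a.b") are all hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    """Hypothetical record for one actionable requirement."""
    req_id: str
    standard: str  # source standard or scheme, e.g. "ISO" (placeholder values below)
    clause: str    # clause reference in the source (placeholder, not a real clause)
    text: str      # the actionable requirement derived from the directive


@dataclass(frozen=True)
class TestCase:
    """Hypothetical test record with a trace link back to its requirement."""
    test_id: str
    req_id: str
    description: str


def traceability_matrix(reqs, tests):
    """Map each requirement ID to the IDs of the tests that verify it."""
    matrix = {r.req_id: [] for r in reqs}
    for t in tests:
        # A test that traces to a nonexistent requirement is a broken link.
        if t.req_id not in matrix:
            raise ValueError(f"{t.test_id} traces to unknown requirement {t.req_id}")
        matrix[t.req_id].append(t.test_id)
    return matrix


def untested(matrix):
    """Requirements with no verifying test: coverage gaps to close before an audit."""
    return sorted(r for r, ts in matrix.items() if not ts)


if __name__ == "__main__":
    reqs = [
        Requirement("REQ-1", "ISO", "x.y", "Stop motion within a specified time after e-stop"),
        Requirement("REQ-2", "UL", "a.b", "Limit contact force near humans"),
    ]
    tests = [TestCase("TC-1", "REQ-1", "Trigger e-stop at max speed; measure stop time")]
    m = traceability_matrix(reqs, tests)
    print(m)            # {'REQ-1': ['TC-1'], 'REQ-2': []}
    print(untested(m))  # ['REQ-2']
```

Keeping the link on the test side (`TestCase.req_id`) means a requirement can accumulate any number of tests, and an empty list in the matrix is a direct, checkable signal of a coverage gap.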

Robustness: Handling Edge Cases

Robots must handle unforeseen scenarios reliably. Saphira’s platform identifies and tests edge cases by automating impact analysis: any change in design or operating environment automatically triggers a report on the affected requirements and components. This continuous testing enhances robustness.
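Automated impact analysis of this kind is often modeled as reachability in a dependency graph. When a component or environmental assumption changes, everything downstream of it (dependent components, the requirements they support, and the tests that verify those requirements) is flagged for review. The sketch below shows that idea with a breadth-first traversal. It is a minimal illustration, not Saphira's implementation. The graph contents, node names, the `REQ-`/`TC-` naming convention, and the functions `impact_of` and `impact_report` are all hypothetical.

```python
from collections import deque

# Hypothetical trace graph: an edge A -> B means "B depends on A",
# so a change to A may affect B. All node names are illustrative only.
DEPENDENTS = {
    "motor_controller": ["arm_joint_3", "REQ-1"],
    "arm_joint_3": ["REQ-2"],
    "lidar": ["REQ-3"],
    "operating_environment": ["REQ-3"],
    "REQ-1": ["TC-1"],
    "REQ-2": ["TC-2", "TC-3"],
    "REQ-3": ["TC-4"],
}


def impact_of(changed, graph):
    """Return every node reachable from the changed nodes (breadth-first)."""
    seen = set()
    queue = deque(changed)
    while queue:
        node = queue.popleft()
        for dep in graph.get(node, []):
            if dep not in seen:  # the seen-set also makes cycles safe
                seen.add(dep)
                queue.append(dep)
    return seen


def impact_report(changed, graph):
    """Group affected nodes into requirements, tests to rerun, and components.

    Classification by ID prefix is a simplifying assumption for this sketch.
    """
    affected = impact_of(changed, graph)
    return {
        "requirements": sorted(n for n in affected if n.startswith("REQ-")),
        "tests_to_rerun": sorted(n for n in affected if n.startswith("TC-")),
        "components": sorted(n for n in affected if not n.startswith(("REQ-", "TC-"))),
    }


if __name__ == "__main__":
    print(impact_report(["motor_controller"], DEPENDENTS))
    # {'requirements': ['REQ-1', 'REQ-2'], 'tests_to_rerun': ['TC-1', 'TC-2', 'TC-3'], 'components': ['arm_joint_3']}
```

Because the traversal follows transitive dependencies, a change to `motor_controller` also surfaces `REQ-2` and its tests through `arm_joint_3`, which a direct-links-only lookup would miss.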

Trust: Certifiable Safety Artifacts

Trust is built through transparency and compliance. Saphira generates auditor-ready safety reports from existing engineering data, turning safety insights into certifiable artifacts that meet regulatory and investor expectations.

Case Study: 1X Technologies

Launching a humanoid robot involves complex safety challenges. 1X Technologies aimed to accelerate its go-to-market timeline while maintaining high safety standards.

Challenge: Rapid development without compromising safety or stakeholder trust.

Solution: Saphira’s modular safety framework, covering risk analysis, safety documentation, and team alignment activities.

Impact: A faster route to market, stronger investor confidence, and clearer safety validation. Toni Garcia of 1X Technologies describes safety as a shared language that bridges engineering, regulation, and stakeholder communication.

Why Collaboration Matters

Achieving AI safety at scale requires close collaboration between academia, startups, and industry. Stanford’s research sets the vision, establishing fundamental safety principles, while Saphira translates these into operational practices that deliver tangible safety assurances.

Together, these efforts foster a safer, more trustworthy deployment of robotics, facilitating innovation that is both ambitious and responsible.

Conclusion

The journey from AI research to real-world trust in robotics hinges on rigorous safety practices, transparency, and collaboration. As events like the Stanford Center for AI Safety Annual Meeting highlight, aligning academic insights with practical tools such as Saphira helps ensure that robotics not only advances technologically but does so with safety at the forefront. Trust in AI-driven robots becomes achievable when safety is a structured, automated, and shared priority.

By bridging the gap between research and application, we move closer to a future where robots are safe, reliable partners in our everyday lives.